Generative AI regulation: Innovation and accountability

It is no longer a question of whether generative AI will change the world, but how. This technology is already transforming the way we write code, communicate, and source and consume information.

The potential future benefits of these advancements are monumental. However, large language models and other forms of generative AI also present risks: they can undermine the usefulness of the information they source, and they can cause damage in unintended ways.

Sensible regulation is critical to foster responsible use of generative AI while mitigating potential negative impacts.

The onus of developing such regulations naturally falls on governments and regulatory bodies. But if we in the business community have the capability to leverage the power of generative AI, we should also take responsibility for helping to develop appropriate guardrails and for providing input on the regulations that govern these technologies.

We must self-govern and contribute to principles that can become regulations for all concerned.

At EXL, we advocate the following two core principles:

A. Apply generative AI only to closed data sets initially to ensure safety and confidentiality of all data.

B. Keep the development and adoption of use cases leveraging generative AI under the oversight of professionals, so that there are always “humans in the loop”.

These principles are essential for maintaining accountability, transparency, and fairness in the use of generative AI technologies.

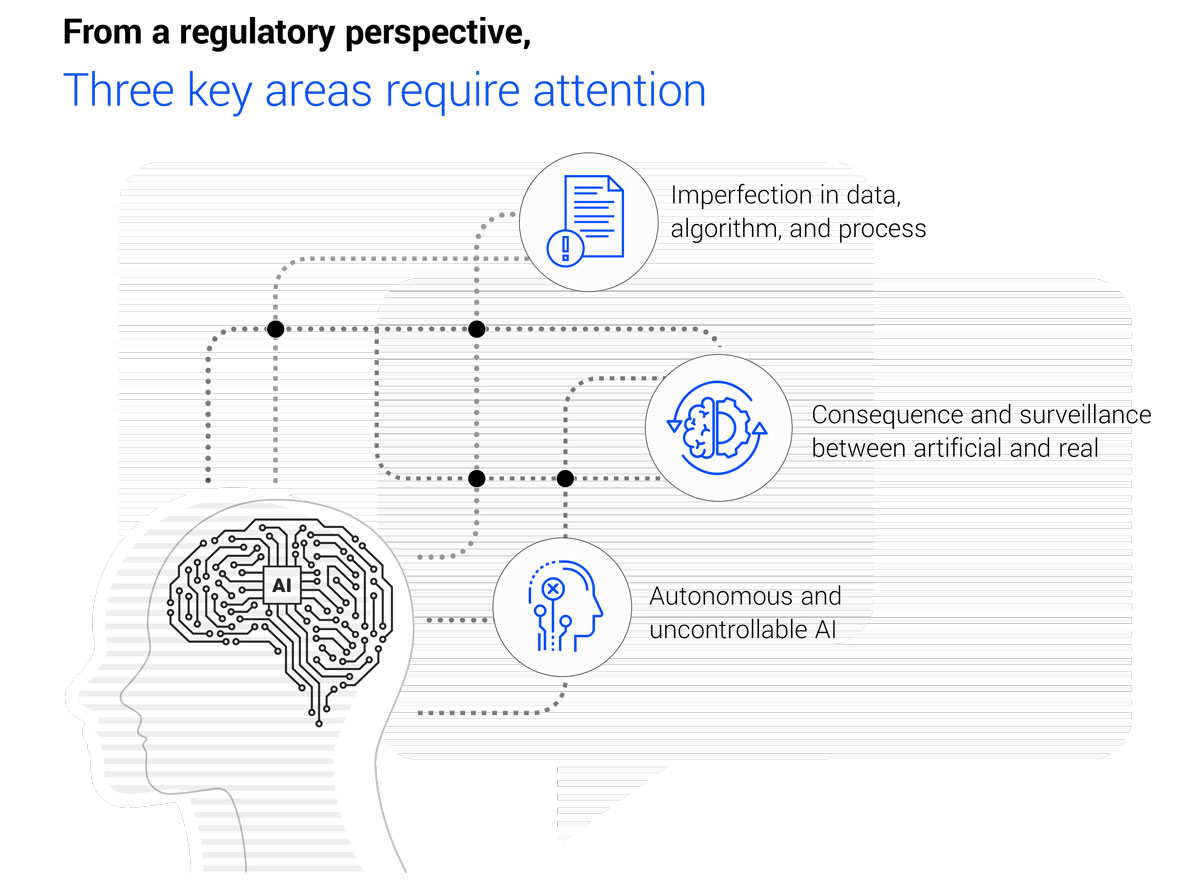

Imperfection in data, algorithm, and process

Regulations should establish processes for identifying and addressing imperfections in the data, algorithms, and processes used in generative AI. This includes addressing AI bias resulting from biased or inadequate training data, as well as algorithmic biases that may perpetuate unfair or discriminatory outcomes. Regulations should promote transparency and the documentation of processes to ensure explainability and accountability and to enable scrutiny of generative AI systems, including safeguarding intellectual property, protecting data privacy, and preventing ‘hallucinations’.

Consequence and surveillance between artificial and real

Distinguishing between AI-generated content and genuine human-generated content, while challenging, can be critical in certain applications. Regulation should address the consequences and surveillance aspects of this issue. Clear guidelines can determine when it is necessary to label AI-generated content and who is responsible for identifying and authenticating it, such as content creators and platforms. Robust verification mechanisms, such as watermarking or digital signatures, can aid in identifying authentic content, increasing transparency and accountability.
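To make the idea of a verification mechanism concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a recommended standard: it uses a keyed hash (HMAC) from the standard library as a simple stand-in for a true public-key digital signature, and the key, function names, and sample text are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret held by the content creator or platform (assumption).
# A real scheme would more likely use public-key signatures so that anyone
# can verify content without being able to sign it.
SECRET_KEY = b"example-key-not-for-production"


def sign_content(content: str, key: bytes = SECRET_KEY) -> str:
    """Return a hex tag that binds the content to the key holder."""
    return hmac.new(key, content.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_content(content: str, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Compare tags in constant time; False means altered or unsigned content."""
    expected = sign_content(content, key)
    return hmac.compare_digest(expected, tag)


article = "Quarterly report drafted by a human analyst."
tag = sign_content(article)

print(verify_content(article, tag))                # True
print(verify_content(article + " (edited)", tag))  # False
```

The point of the sketch is only that authentication can be automatic once content is tagged at the source. Who holds the keys and who is obliged to check the tags are the questions regulation would need to settle.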

Autonomous and uncontrollable AI

An area for special caution is the potential for an iterative process in which AI creates subsequent generations of AI, eventually leading to AI that is misdirected or that compounds errors. The progression from first generation to second and third generation AI is expected to occur rapidly. The self-declaration of AI models, in which each model openly acknowledges its AI nature, is a fundamental requirement. However, enabling and regulating this self-declaration poses a significant practical challenge. One approach could involve requiring hardware and software companies to implement hardcoded restrictions that allow only a certain threshold of AI functionality. Advanced functionality above that threshold could be subject to system inspections, audits, testing for compliance with safety standards, and restrictions on degrees of deployment and levels of security. Regulators should define and enforce these restrictions to mitigate risks.

Regulations should address the potential risks associated with AI systems becoming uncontrollable or operating outside desired parameters. Frameworks for human oversight, intervention, and accountability should be established. This involves maintaining a human in the loop for critical decision-making, implementing safeguards against AI systems going rogue, and designing mechanisms for monitoring and accountability in autonomous AI systems. Another pathway to ensure AI control is through the explainability of AI models, potentially necessitating regulatory mandates. By demanding explainability, we gain insights into the decision-making processes of AI models, enabling intervention and control.

By establishing open guidelines and fundamental principles, we can also work to avoid individual countries and jurisdictions coming up with uncoordinated and conflicting responses.

In summary, balancing the immense potential of generative AI with its risks requires comprehensive regulation. Regulations should encourage collaboration, research, and development while providing safeguards against potential harms. Collaboration between policymakers, AI researchers, industry experts, and ethicists is crucial for establishing guidelines that strike a balance between innovation and responsible use of generative AI technologies. This will ensure that generative AI contributes positively to society while safeguarding against potential risks.