AWS-based data ingestion solution for a UK online retailer

Marketing communications play a vital role in engaging customers and strengthening their relationship with the business. However, the marketing spend can achieve its objective only if it is optimised and spent wisely to promote the right behaviour from the right customers. A primary requirement for this optimisation is accurate data explaining how customers respond to various marketing communications made across different marketing channels.

Our client, a leading UK online retailer, had a well-evolved CRM marketing ecosystem rooted in data insights and powered by all major channels, with Email, SMS, and Push being the key ones. However, feedback data for the push channel was missing, so the channel was being used sub-optimally. The client partnered with EXL to close this gap, as app-engaged customers are generally stickier and more valuable.

Our solution

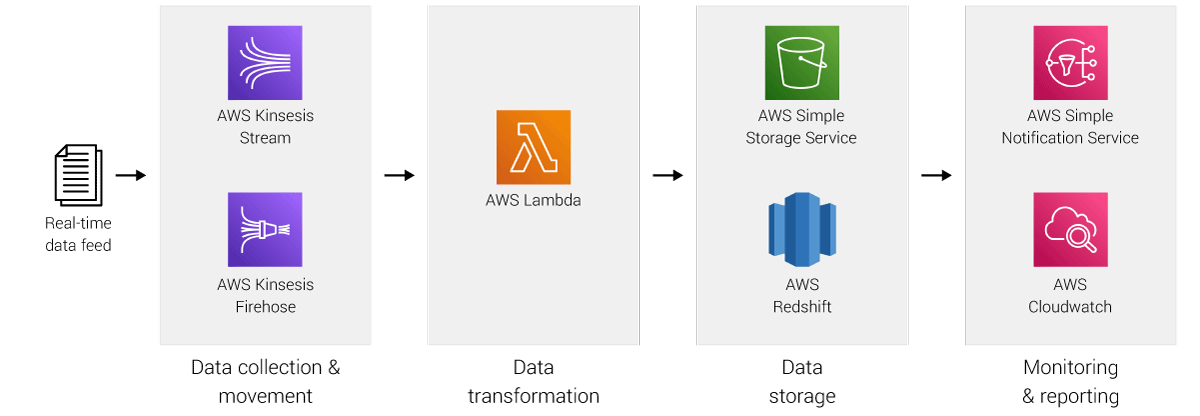

EXL helped the client build an AWS-based solution to ingest, transform, and store feedback data for the push notification messages deployed via their marketing vendor. The solution is fed by a real-time data feed from the vendor and uses core AWS services such as Kinesis Data Streams, Kinesis Data Firehose, Lambda, Simple Notification Service (SNS), Redshift, and Simple Storage Service (S3).

The following are some salient features of the solution.

Third party integration

The first step was to integrate the client’s AWS cloud platform with the vendor’s ecosystem to receive the data feed. An AWS access key, an AWS secret access key, and the Kinesis stream name were used to build this connection.

Solution architecture

The data feed from the vendor is received through an AWS Kinesis data stream, which triggers a Lambda function. The Lambda function is configured to run every minute and batch the records received in the last minute. It transforms and segregates the data by push event (open, click, etc.) and routes each record to the corresponding AWS Kinesis Data Firehose delivery stream. The processed data is then delivered to an S3 bucket and stored as JSON files. As soon as a file lands in the S3 bucket, another Lambda function is triggered, which loads the data into Redshift database tables.
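The routing step can be illustrated with a minimal sketch. It is not the client's actual code: the delivery stream names, the `event_type` field, and the optional `firehose` parameter are assumptions made for illustration. The only AWS details relied on are that Lambda receives Kinesis payloads base64-encoded under `Records[].kinesis.data`, and that the boto3 Firehose client's `put_record_batch` accepts at most 500 records per call.

```python
import base64
import json
from collections import defaultdict

# Hypothetical mapping from push event type to Firehose delivery stream name.
STREAM_BY_EVENT = {
    "open": "push-open-delivery-stream",
    "click": "push-click-delivery-stream",
}
DEFAULT_STREAM = "push-other-delivery-stream"  # assumed catch-all stream


def group_records_by_stream(event):
    """Decode Kinesis records and group them by target delivery stream."""
    batches = defaultdict(list)
    for record in event.get("Records", []):
        payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
        # "event_type" is an assumed field name in the vendor feed.
        event_type = str(payload.get("event_type", "")).lower()
        stream = STREAM_BY_EVENT.get(event_type, DEFAULT_STREAM)
        # Newline-delimit each record so the S3 object holds one JSON document per line.
        data = (json.dumps(payload) + "\n").encode("utf-8")
        batches[stream].append({"Data": data})
    return dict(batches)


def handler(event, context, firehose=None):
    """Lambda entry point; `firehose` can be injected for local testing."""
    if firehose is None:
        import boto3  # imported lazily so the grouping logic runs without AWS access

        firehose = boto3.client("firehose")
    batches = group_records_by_stream(event)
    for stream, records in batches.items():
        # Send in chunks of 500, the per-call record limit of put_record_batch.
        for start in range(0, len(records), 500):
            firehose.put_record_batch(
                DeliveryStreamName=stream,
                Records=records[start:start + 500],
            )
    return {stream: len(records) for stream, records in batches.items()}
```

A production version would also inspect the `FailedPutCount` field in each `put_record_batch` response and retry the rejected records.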

Pattern matching on the S3 key is used to determine the destination table.
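As a rough illustration of this key-based routing, the sketch below maps an S3 object key to a Redshift table name with regular expressions. The key prefixes and table names are hypothetical, since the actual layout is not described here. The event shape (`Records[].s3.bucket.name` and `Records[].s3.object.key`, with URL-encoded keys) follows the standard S3 notification format.

```python
import re
from urllib.parse import unquote_plus

# Hypothetical key prefixes and table names, for illustration only.
TABLE_PATTERNS = [
    (re.compile(r"^push/open/"), "push_open"),
    (re.compile(r"^push/click/"), "push_click"),
]


def table_for_key(s3_key):
    """Return the destination table for an S3 object key, or None if unmatched."""
    for pattern, table in TABLE_PATTERNS:
        if pattern.search(s3_key):
            return table
    return None


def targets_from_s3_event(event):
    """Yield (bucket, key, table) for every object in an S3 notification event."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key, table_for_key(key)
```

Returning `None` for an unmatched key lets the caller decide whether to skip the file or raise an alert, rather than loading it into the wrong table.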

The solution is written in Python, and Terraform is used for Infrastructure as Code to simplify deployment across environments. The version-controlled code repository is maintained in Bitbucket.

Alerting & monitoring

An SNS notification system has been set up to email stakeholders if a Lambda function fails during execution. All logs and key metrics, such as the number of messages processed, data volume, Lambda invocations, and error counts, are also captured through a CloudWatch dashboard.
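One way to wire up such failure alerts is a small decorator around each Lambda handler, sketched below. This is an illustrative pattern rather than the implementation used in the project: `notify_on_failure` and the injected `publish` callable are hypothetical names. In a real deployment `publish` could be `boto3.client("sns").publish`, which accepts `TopicArn`, `Subject`, and `Message` keyword arguments.

```python
import functools
import traceback


def notify_on_failure(publish, topic_arn):
    """Wrap a Lambda handler so unhandled errors are published, then re-raised."""

    def decorator(handler):
        @functools.wraps(handler)
        def wrapper(event, context):
            try:
                return handler(event, context)
            except Exception:
                publish(
                    TopicArn=topic_arn,
                    # SNS limits email subjects to 100 characters.
                    Subject=f"Lambda failure: {handler.__name__}"[:100],
                    Message=traceback.format_exc(),
                )
                raise  # keep the invocation marked as failed in CloudWatch

        return wrapper

    return decorator
```

Re-raising after publishing keeps the error visible in the Lambda error metrics, so the email alert and the dashboard stay consistent.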

AWS services used in the solution

Solution testing

Testing is an important part of solution development: it ensures reliability, identifies and remediates defects, and builds stakeholder confidence in using the solution to drive the desired business outcomes. We conducted unit testing and load testing for our solution.

1. Unit testing

The main purpose of unit testing is to ensure that each unit of the solution performs as expected. The developers carried this out during the build phase. Pytest, a Python-based testing framework, was used to write and execute the tests. The expected output was compared with the actual output to verify the correctness of each unit.
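The expected-versus-actual comparison might look like the sketch below. The function under test, `normalise_event`, and its field names are hypothetical stand-ins for one of the solution's transformation units. Pytest collects any function whose name starts with `test_` and reports a failed `assert` with a diff of the two values.

```python
def normalise_event(raw):
    """Hypothetical unit under test: tidy one push feedback record."""
    return {
        "customer_id": str(raw["customer_id"]),
        "event_type": raw["event_type"].strip().lower(),
    }


def test_normalise_event_cleans_event_type():
    actual = normalise_event({"customer_id": 42, "event_type": " Open "})
    expected = {"customer_id": "42", "event_type": "open"}
    assert actual == expected


def test_normalise_event_rejects_missing_customer_id():
    try:
        normalise_event({"event_type": "open"})
    except KeyError:
        return  # the missing field was detected, as intended
    raise AssertionError("expected a KeyError for a record without customer_id")
```

Saved in a file such as `test_transform.py` (an assumed name), these tests run with the `pytest` command from the same directory.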

2. Load testing

Load testing measures how the solution is expected to perform in the production environment under live data load. Since our solution was being built to ingest a large number of records in near real-time, we carried out comprehensive load testing. We used Locust, a load testing tool written in Python, to run the scenarios and evaluate the expected performance.

User access

Most of the teams in the client organisation use SAS to access data for their initiatives. Therefore, a connection was made between SAS and the Redshift database for the users to access the ingested push data tables. In addition, we also created Power BI dashboards fed by the push data tables to enable quicker insights for the business in a self-serve fashion.

Project management

EXL’s team consisted of a Solution Architect, Data Engineers, a Business Analyst, and a QA Engineer, who worked alongside their client counterparts to design, build, and deploy the solution. We followed an Agile way of working and delivered the end-to-end tested solution in six fortnightly sprints. We used Jira as the project management tool, and its easy integration with Bitbucket (used for our version-controlled code repository) helped improve the team’s productivity. Agile best practices such as daily stand-ups, sprint planning sessions, fortnightly demos, and retro sessions provided an effective communication and governance framework.

Outcome

Working alongside the client partners, we delivered the solution in a short span of 3 months. The solution performs near real-time ingestion of the push feedback data and processes approximately 1.5 million records daily. The notification process keeps stakeholders informed of any issues and prompts intervention where needed. The design is highly modular, which simplifies troubleshooting as well as future enhancements.
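To put the daily volume in perspective, the short calculation below converts 1.5 million records per day into average per-second and per-minute rates. These are averages only and assume an even spread across the day. Push feedback is likely to arrive in bursts after campaign sends, so peak rates would be higher.

```python
RECORDS_PER_DAY = 1_500_000  # approximate daily volume quoted above

per_second = RECORDS_PER_DAY / (24 * 60 * 60)
per_minute = RECORDS_PER_DAY / (24 * 60)

print(f"{per_second:.1f} records per second on average")  # 17.4
print(f"{per_minute:.0f} records per minute on average")  # 1042
```

On these numbers, a one-minute batch would hold roughly a thousand records on average.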

Next steps

The push feedback data is now available to be incorporated into various decisioning processes. The following are some high-priority use cases identified by the business to improve marketing performance:

- Upgrade customer channel preference models to reallocate channel spend based on the customer’s preferred means of communication

- Deploy push communications at the customer’s preferred time when the response likelihood is high

- Upgrade attribution models to better understand the value of various channels and touchpoints